Program Overview

The Master's Program in Big Data Engineering & Cloud Computing offered by Digicrome is designed to equip professionals with advanced skills and knowledge in managing and leveraging large-scale data and cloud computing technologies. This comprehensive program integrates theoretical learning with practical hands-on experience, preparing participants to excel in roles such as Big Data Engineers and Cloud Architects.

Key Focus Areas:

Big Data Engineering: Gain expertise in handling and processing large volumes of data using cutting-edge technologies like Hadoop, Spark, and Kafka. Learn data modeling, data warehousing, and optimization techniques crucial for effective data management.

Cloud Computing: Explore cloud platforms such as AWS, Azure, and Google Cloud. Master cloud architecture, deployment models, and services essential for designing scalable, secure, and cost-effective cloud solutions.

Data Analytics and Machine Learning: Develop skills in data analysis, predictive modeling, and machine learning algorithms. Understand how to extract valuable insights from data to drive business decisions and innovation.

DevOps and Containerization: Learn DevOps practices for continuous integration and deployment (CI/CD) in cloud environments. Acquire proficiency in containerization technologies like Docker and Kubernetes for efficient application deployment and management.

Program Structure:

- Duration: Flexible, typically spanning 11 months.

- Internship: 3-month live internship program

- Learning Modes: Blend of online lectures, hands-on labs, and projects.

- Capstone Project: Apply acquired skills to solve real-world challenges in big data and cloud computing.

Career Opportunities: Graduates of this program can pursue roles such as Big Data Engineer, Cloud Architect, Data Scientist, Machine Learning Engineer, and DevOps Engineer across various industries including technology, finance, healthcare, and e-commerce.

Why Choose Digicrome?

- Industry-aligned curriculum developed in collaboration with experts.

- Practical, hands-on learning approach with industry projects and case studies.

- Access to a global network of professionals and mentors.

- Certification upon successful completion, validating expertise in big data engineering and cloud computing.

Join Digicrome's Master's Program in Big Data Engineering & Cloud Computing to advance your career in the rapidly evolving fields of big data and cloud technology. Contact us today to embark on your journey towards becoming a skilled professional in this dynamic domain.

Master's Program in Big Data Engineering & Cloud Computing

- ₹299000.00

Features

- 11-Month Live Online Program

- Next Batch 31/08/2024

- Guaranteed Job Placement Aid*

- 50+ Industry Projects

Key Highlights

- 11-Month Live Online Program

- Next Batch 31/08/2024

- Guaranteed Job Placement Aid*

- 50+ Industry Projects

- 3-Month Internship Program

- 1 Month of Job Interview Preparation

- Expert Experienced Trainers

- 24/7 Career Support

Program Objective

1 Introduction to Python Programming - Basics of Python, Data Types, Print and Input functions

2 Data Structures in Python - Different Methods of Each Data Structure

3 Control Statements, Loops and Functions in Python

4 Object Oriented Programming - Classes and Objects, Inheritance, etc.
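As a small illustration of the classes, objects and inheritance covered in this module, here is a minimal sketch. The class names `Dataset` and `CsvDataset` are hypothetical examples, not course material. The subclass reuses the parent constructor through `super()` and overrides one method.

```python
class Dataset:
    """Base class: a named collection of records (hypothetical example)."""

    def __init__(self, name, records):
        self.name = name
        self.records = list(records)

    def size(self):
        return len(self.records)

    def describe(self):
        return f"{self.name}: {self.size()} records"


class CsvDataset(Dataset):
    """Subclass: parses comma-separated lines before storing them."""

    def __init__(self, name, lines):
        # Parse each line into a tuple, then delegate to the parent constructor.
        super().__init__(name, (tuple(line.split(",")) for line in lines))

    def describe(self):
        # Method overriding: extend the inherited description.
        return "CSV " + super().describe()


sales = CsvDataset("sales", ["a,1", "b,2", "c,3"])
print(sales.describe())  # CSV sales: 3 records
print(sales.records[0])  # ('a', '1')
```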

1 Advanced Numerical Python - NumPy Tutorial

2 Data Cleaning and Wrangling with Pandas - Advanced Pandas Tutorial

3 Exploratory Data Analysis using Matplotlib and Seaborn

4 Basic and Advanced Web Scraping using BeautifulSoup

5 Basic and Advanced Web Scraping using Selenium

1 Introduction to Databases - (What are Databases), (What is MySQL), (What is RDBMS), (RDBMS v/s NoSQL)

2 Database Workflows - Understanding Entity Relationship Diagrams, Understanding Normalization (1NF, 2NF, 3NF, BCNF)

3 Structured Query Language (SQL) - CRUD Operations

4 Data Aggregation Functions - (GROUP BY), (ORDER BY), (HAVING), (COUNT, SUM, MIN, MAX, AVG)

5 Joins in SQL - Primary Key and Foreign Key, Constraints, Set Operations, Transaction Control - SAVEPOINT, ROLLBACK
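The SQL topics above (constraints, joins, aggregation, savepoints) can be tried out in a few lines. This is a minimal sketch that uses Python's built-in `sqlite3` as a stand-in for MySQL; the table names and rows are hypothetical, and while the `JOIN`, `GROUP BY`, `HAVING` and `SAVEPOINT` syntax shown largely carries over to MySQL, details such as the foreign-key pragma are SQLite-specific.

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode, so the
# BEGIN / SAVEPOINT / ROLLBACK TO statements below are issued explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled

conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(id),"
    " amount REAL NOT NULL CHECK (amount > 0))"
)
conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)",
                 [(1, "Asha"), (2, "Ravi"), (3, "Meera")])
conn.executemany("INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
                 [(1, 120.0), (1, 80.0), (2, 50.0), (3, 300.0), (3, 20.0)])

# JOIN + GROUP BY + HAVING + ORDER BY: customers whose total spend exceeds 100.
top_customers = conn.execute(
    "SELECT c.name, COUNT(*) AS n_orders, SUM(o.amount) AS total "
    "FROM orders AS o JOIN customers AS c ON c.id = o.customer_id "
    "GROUP BY c.name HAVING SUM(o.amount) > 100 ORDER BY total DESC"
).fetchall()
print(top_customers)  # [('Meera', 2, 320.0), ('Asha', 2, 200.0)]

# The FOREIGN KEY constraint rejects an order for a customer that does not exist.
try:
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (99, 10.0)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

# SAVEPOINT / ROLLBACK TO: undo part of a transaction and keep the rest.
conn.execute("BEGIN")
conn.execute("INSERT INTO orders (customer_id, amount) VALUES (2, 70.0)")
conn.execute("SAVEPOINT before_second_insert")
conn.execute("INSERT INTO orders (customer_id, amount) VALUES (2, 999.0)")
conn.execute("ROLLBACK TO before_second_insert")  # discards only the 999.0 row
conn.execute("COMMIT")

ravi_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer_id = 2").fetchone()[0]
print(ravi_total)  # 120.0
```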

1 Introduction and Overview - (Understanding Repositories, Branches, and Commits), (Setting up Git and GitHub)

2 Version Control with Git, Repository Management, and Collaboration

3 Workflow Automation with GitHub - GitHub Actions, Automating CI/CD Pipelines for Data Engineering Projects

4 Security and Compliance in GitHub - Managing Access Permissions, GitHub Pages, Documentation and Wikis

5 Integrating GitHub with other tools and Cloud Platforms

6 Advanced Techniques - Rebasing and Cherry-picking Commits, Git for Automated Tasks, Stashing Changes, and Using the Reflog

0 Installation and Configuration

1 Introduction to MongoDB - Overview, Applications and Use Cases, Relational Databases vs Document Oriented Databases

2 MongoDB Architecture - (Core Concepts - Documents, Collections, Databases), (JSON & BSON Data Formats), (MongoDB Server), (Replica Sets and Sharding)

3 NoSQL Queries - CRUD Operations, Querying Documents

4 Data Modeling - Schema, Design, Embedding vs Referencing, Arrays & Nested Documents, Indexing Strategies

5 Aggregation Framework - Introduction, Pipeline Stages ($match, $group, $project, etc.), Aggregation Transformation and Analysis, Performance Tuning

6 Transactions and Concurrency - ACID Transactions, Session-based Transactions, Optimistic Concurrency Control

7 MongoDB Indexing - Creation and Management of Indexes, Types of Indexes

8 Replication and High Availability - Setting up and managing Replica Sets, Automatic Failover and Recovery, Read and Write Concerns in Replica Sets

9 Sharding for Scalability - Introduction, Shard Keys and Chunk Distribution, Balancing and Migrating Chunks, Managing Sharded Clusters

10 Advanced MongoDB Concepts

10.1 Authentication and Authorization for Data Security, Role-based access control, Data Encryption, Auditing and Logging

10.2 Performance Tuning - MongoDB Atlas, Ops Manager, Profiling and Optimizing Queries, Resource Management and Capacity Planning

10.3 MongoDB with different Programming Languages, Integration with Big Data Tools - Spark & Hadoop, Using MongoDB and BI tools for Data Visualization
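To make the aggregation framework concrete, the sketch below writes a pipeline in MongoDB's own stage syntax (a list of `$match`, `$group`, `$sort` and `$project` documents, the same shape one would pass to PyMongo's `collection.aggregate(pipeline)`) and evaluates it with a tiny pure-Python interpreter. The interpreter is a hypothetical teaching toy that supports only a handful of operators; it is not how MongoDB executes pipelines, and the sample orders are made up.

```python
# Toy evaluator for a small subset of MongoDB aggregation stages. NOT MongoDB.
_COMPARATORS = {
    "$eq": lambda value, arg: value == arg,
    "$gt": lambda value, arg: value is not None and value > arg,
    "$lt": lambda value, arg: value is not None and value < arg,
    "$in": lambda value, arg: value in arg,
}


def _field(doc, expr):
    # "$amount" refers to a field of the document; anything else is a literal.
    if isinstance(expr, str) and expr.startswith("$"):
        return doc.get(expr[1:])
    return expr


def _matches(doc, spec):
    for field, cond in spec.items():
        value = doc.get(field)
        if isinstance(cond, dict):
            # Unsupported query operators raise KeyError in this toy.
            if not all(_COMPARATORS[op](value, arg) for op, arg in cond.items()):
                return False
        elif value != cond:
            return False
    return True


def _group(docs, spec):
    groups = {}
    for doc in docs:
        key = _field(doc, spec["_id"])
        acc = groups.setdefault(key, {"_id": key})
        for name, expr in spec.items():
            if name == "_id":
                continue
            (op, arg), = expr.items()
            if op != "$sum":
                raise ValueError(f"unsupported accumulator {op}")
            acc[name] = acc.get(name, 0) + (_field(doc, arg) or 0)
    return list(groups.values())


def run_pipeline(docs, pipeline):
    for stage in pipeline:
        (op, spec), = stage.items()
        if op == "$match":
            docs = [d for d in docs if _matches(d, spec)]
        elif op == "$group":
            docs = _group(docs, spec)
        elif op == "$sort":
            # Stable sorts applied last-key-first give the first key top priority.
            for field, direction in reversed(list(spec.items())):
                docs = sorted(docs, key=lambda d: d[field], reverse=direction < 0)
        elif op == "$project":
            keep = {k for k, v in spec.items() if v}
            if spec.get("_id", 1):
                keep.add("_id")  # _id is included unless explicitly excluded
            docs = [{k: v for k, v in d.items() if k in keep} for d in docs]
        else:
            raise ValueError(f"unsupported stage {op}")
    return docs


orders = [
    {"_id": 1, "city": "Pune", "status": "paid", "amount": 250},
    {"_id": 2, "city": "Delhi", "status": "paid", "amount": 100},
    {"_id": 3, "city": "Pune", "status": "refunded", "amount": 75},
    {"_id": 4, "city": "Pune", "status": "paid", "amount": 50},
    {"_id": 5, "city": "Delhi", "status": "paid", "amount": 400},
]
pipeline = [
    {"$match": {"status": "paid"}},
    {"$group": {"_id": "$city", "revenue": {"$sum": "$amount"}, "orders": {"$sum": 1}}},
    {"$sort": {"revenue": -1}},
    {"$project": {"revenue": 1}},
]
print(run_pipeline(orders, pipeline))
```

Each stage consumes the previous stage's output, which is why filtering with `$match` early (before `$group`) reduces the work the later stages do.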

0 Installation and Configuration

1 Introduction to Elasticsearch - Use Cases, Core Features, Elasticsearch vs Other NoSQL Databases

2 Elasticsearch Architecture - Cluster, Nodes, Indices, Shards, Replicas, how data is stored in JSON documents, Role of Mappings

3 Data Modelling and Ingestion

3.1 Schema Design, Nested and Parent-Child Relationships

3.2 Using REST APIs for CRUD Operations, Bulk API for efficiently indexing large volumes of data

3.3 Integration with tools like Logstash, Beats, Apache Kafka, etc. for ingesting data

4 Search and Querying - Query DSL (Domain Specific Language), Search APIs for basic and complex queries, Filters and Aggregations

5 Miscellaneous

5.1 Data Management - Index Management, Aliases, Reindexing

5.2 Performance Optimization - Indexing Performance, Search Performance, Shard Allocation

5.3 Monitoring and Maintenance - (Monitoring Tools like Kibana, Elastic Stack Monitoring, etc.), Cluster Health, Backup and Restore

5.4 Security - Authentication and Authorization, Role-based access control, SSL/TLS for secure data communication

6 Advanced techniques using Elasticsearch

6.1 Real-Time Data Analytics and Monitoring, Data Pipelines, Log Management and Event Data Analysis

6.2 Machine Learning for Anomaly Detection and Predictive Analytics

6.3 Geospatial Queries, Scripting and Extensions
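Full-text search in Elasticsearch rests on an inverted index: each term maps to the documents that contain it. The sketch below is a deliberately simplified, hypothetical model of that idea. Its analyzer only lowercases and splits, and it ranks by summed term frequency, whereas Elasticsearch (via Lucene) uses configurable analyzers and BM25 scoring by default. The `operator` argument loosely mirrors the `operator` option of a Query DSL `match` query.

```python
import re
from collections import defaultdict


def tokenize(text):
    # Minimal analyzer: lowercase, then keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self):
        self.postings = defaultdict(dict)  # term -> {doc_id: term frequency}
        self.docs = {}

    def index(self, doc_id, text):
        self.docs[doc_id] = text
        for term in tokenize(text):
            self.postings[term][doc_id] = self.postings[term].get(doc_id, 0) + 1

    def search(self, query, operator="or"):
        terms = tokenize(query)
        if not terms:
            return []
        # .get() avoids creating empty postings for unknown terms.
        hits = [set(self.postings.get(t, {})) for t in terms]
        matched = set.intersection(*hits) if operator == "and" else set.union(*hits)
        scores = {d: sum(self.postings.get(t, {}).get(d, 0) for t in terms) for d in matched}
        # Highest score first; ties broken by document id for determinism.
        return sorted(matched, key=lambda d: (-scores[d], d))


idx = InvertedIndex()
idx.index(1, "Disk error on node-1")
idx.index(2, "Network timeout, retrying")
idx.index(3, "Disk full: disk usage at 98%")
print(idx.search("disk"))                        # [3, 1]
print(idx.search("disk error", operator="and"))  # [1]
```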

0 Installation and Configuration

1 Introduction to Apache Hadoop - Overview, History, Use Cases for Big Data

2 Hadoop Architecture - HDFS, YARN, MapReduce, Data Replication and Block Management, How does Hadoop achieve fault tolerance?

3 Hadoop Distributed File System (HDFS) - Architecture, File operations, Data Replication, Control and Security in HDFS

4 Yet Another Resource Negotiator (YARN) - Architecture, Resource Allocation and Management, Job Scheduling and Monitoring

5 MapReduce Model - (Basics - Mapper, Reducer, Combiner, Partitioner), Running and Writing MapReduce Jobs, Optimization Techniques

6 Data Ingestion and ETL Processes

6.1 Apache Sqoop for Importing and Exporting Data

6.2 Apache Flume for Real-time Data

6.3 Apache Pig and Apache Hive for Data Transformation

7 Data Processing and Analytics - Apache Pig and Apache Hive, Integrating Apache Spark with Hadoop for Advanced Analytics

8 Hadoop Security - Authentication, Authorization, Encryption, Data Security in Transit and at Rest, Role-based Access Control

9 Deployment, Monitoring, and Management in the Hadoop Ecosystem

9.1 Monitoring using Apache Ambari and Cloudera Manager

9.2 Collection and Analysis of Metrics

9.3 Scaling, Backup, and Recovery Practices

9.4 Troubleshooting and Debugging

10 Hadoop Ecosystem Advanced Concepts

10.1 HDFS Federation and High Availability, YARN Capacity and Fair Schedulers

10.2 Customizing and Fine Tuning MapReduce

10.3 Integration with Apache Kafka for Real-Time Data

10.4 Using Hadoop with Cloud Platforms - AWS, Google Cloud, Microsoft Azure
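The MapReduce model from this module (mapper, combiner, partitioner, reducer) can be simulated in plain Python to see how data flows through a job. This is a single-process sketch with hypothetical function names, not Hadoop code. Hadoop's default `HashPartitioner` uses the key's Java `hashCode()`, and `zlib.crc32` stands in for it here only so the routing is deterministic.

```python
import zlib
from collections import defaultdict
from itertools import chain


def mapper(line):
    # Map: emit (word, 1) for every word in an input line.
    for word in line.lower().split():
        yield word, 1


def combiner(pairs):
    # Combiner: pre-aggregate on the map side to shrink shuffle traffic.
    local = defaultdict(int)
    for word, count in pairs:
        local[word] += count
    return list(local.items())


def partition(key, num_reducers):
    # Partitioner: every occurrence of a key goes to the same reducer.
    return zlib.crc32(key.encode()) % num_reducers


def reducer(word, counts):
    # Reduce: combine all values that were shuffled to this key.
    return word, sum(counts)


def run_job(splits, num_reducers=2):
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for split in splits:  # one simulated map task per input split
        mapped = chain.from_iterable(mapper(line) for line in split)
        for word, count in combiner(mapped):
            partitions[partition(word, num_reducers)][word].append(count)
    result = {}
    for part in partitions:  # one simulated reduce task per partition
        for word in sorted(part):  # reducers see their keys in sorted order
            key, total = reducer(word, part[word])
            result[key] = total
    return result


splits = [["big data big", "data lake"], ["big cluster", "data"]]
print(run_job(splits))
```

Because the partitioner sends all values for a key to one reducer, the final counts do not depend on the number of reducers; only the load per reducer changes.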

0 Installation and Configuration

1 Introduction to Apache Spark - Overview, Key Theoretical concepts: RDD, DAG, SparkContext, SparkSession

2 Spark Architecture

2.1 Cluster Managers - Standalone, YARN, Mesos, Kubernetes

2.2 Executors, Workers, Job, Task, and Stage Concepts

2.3 How does Spark achieve fault tolerance?

3 Spark Core Concepts - RDDs Working, Pair RDDs operations and usage, Broadcast variables and accumulators

4 Spark SQL - SQL Operations, DataFrames and Datasets, Interoperability between DataFrames and RDDs, Performance Optimization

5 Data Processing with Spark - ETL Processes, (Data Sources - HDFS, S3, Cassandra, HBase, JDBC), File formats

6 Spark Streaming - DStreams, Structured Streaming, Window Operations, Stateful Transformations, Checkpointing

7 Machine Learning with Spark

7.1 Pipelines, Transformers, Estimators

7.2 Feature Engineering with Spark

7.3 Classification, Clustering, Regression, Collaborative Filtering

8 Graph Processing - Creating and Transforming Graphs using GraphX, PageRank, Triangle Counting, Connected Components

9 Advanced Spark Concepts - Shuffles, Data Serialization, Performance Tuning and Configuration

10 Deploying Spark Applications - Debugging and Troubleshooting
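Two core Spark ideas from this module, lazy transformations and lineage-based recomputation, can be illustrated without a cluster. The `LazyDataset` class below is a hypothetical toy, not the PySpark API; in PySpark the equivalent chain would be `sc.parallelize(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0).collect()`. Transformations only record a step in the lineage, and the action `collect()` replays the lineage from the source, which is also how Spark rebuilds a lost partition.

```python
class LazyDataset:
    """Toy model of lazy evaluation and lineage. NOT the PySpark API."""

    def __init__(self, source, lineage=()):
        self._source = source          # callable that (re)produces the input data
        self.lineage = tuple(lineage)  # recorded transformations, in order

    def map(self, fn):
        # Transformation: returns a new dataset; nothing is computed yet.
        return LazyDataset(self._source, self.lineage + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self.lineage + (("filter", pred),))

    def collect(self):
        # Action: replay the whole lineage from the source.
        data = iter(self._source())
        for kind, fn in self.lineage:
            data = map(fn, data) if kind == "map" else filter(fn, data)
        return list(data)


reads = []  # records each time the source is read


def source():
    reads.append(1)
    return range(10)


squares = LazyDataset(source).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
print(len(reads))         # 0: defining transformations did no work
print(squares.collect())  # [0, 4, 16, 36, 64]
print(len(reads))         # 1: the action triggered one read of the source
```

Calling `collect()` again would read the source a second time, which is the motivation for Spark's `cache()` and `persist()` when a dataset is reused.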

0 Installation and Configuration

1 Introduction to Apache Hive - Overview, Use Cases in Real World, Comparison with SQL and RDBMS

2 Hive Architecture - (HiveQL, Metastore, Driver, Compiler, Execution Engine, and HiveServer2), Interaction of Hive with Hadoop

3 Hive Query Language (HiveQL)

3.1 Basics of HiveQL, Data Definition Language, Data Manipulation Language

3.2 Create, Read, Update, Delete, Insert operations

3.3 Querying Data in HiveQL - SELECT, JOINs, Subqueries

3.4 Functions in HiveQL - User-Defined Functions, User-Defined Aggregate Functions

3.5 Windowing and Analytics Functions

3.6 Using Views and Indexes

3.7 Writing and Optimizing Complex Queries

4 Hive Data Modeling, Data Types, and File Formats

4.1 Partitioning and Bucketing

4.2 Table Properties and Constraints, External vs Managed Tables

4.3 Complex Data Types - Arrays, Maps, Structs

4.4 File Formats - TextFile, SequenceFile, Optimized Row Columnar (ORC), Parquet, Avro

5 Hive Security and Performance Tuning - Authentication, Authorization, Role-Based Access Control

6 Query Optimization Techniques, Indexing and Partition Pruning, Configuring and Tuning Hive Parameters

7 Data Ingestion and ETL Processes, Monitoring Hive Performance

8 Hive on Cloud Platforms - AWS EMR, Google Cloud Dataproc, Azure HDInsight
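Partitioning and bucketing (declared in HiveQL with `PARTITIONED BY` and `CLUSTERED BY (col) INTO n BUCKETS`) decide where rows are stored, and partition pruning is what makes filtered queries cheap. The sketch below models that layout in Python: partitions become nested `key=value` directories, a row's bucket is a hash of the bucketing column modulo the bucket count, and pruning skips directories that cannot match a filter. The paths and helper names are hypothetical, and `zlib.crc32` stands in for Hive's own hash function.

```python
import zlib


def partition_path(table_root, partitions):
    # Hive lays out partitions as nested key=value directories.
    return "/".join([table_root] + [f"{key}={value}" for key, value in partitions])


def bucket_for(value, num_buckets):
    # Bucketing: hash(column value) mod number of buckets (crc32 as a stand-in).
    return zlib.crc32(str(value).encode()) % num_buckets


def prune(paths, key, allowed):
    # Partition pruning: keep only directories whose partition value passes the filter.
    kept = []
    for path in paths:
        parts = dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)
        if parts.get(key) in allowed:
            kept.append(path)
    return kept


root = "/warehouse/sales"
paths = [partition_path(root, [("dt", d), ("country", c)])
         for d in ["2024-08-30", "2024-08-31"] for c in ["IN", "US"]]
print(paths[0])  # /warehouse/sales/dt=2024-08-30/country=IN
# A query filtering on dt = '2024-08-31' only needs to scan two directories.
print(prune(paths, "dt", {"2024-08-31"}))
print(bucket_for("cust-42", 8))  # a bucket number in 0..7
```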

0 Installation and Configuration

1 Introduction to Apache Kafka - Overview, History, Use Cases for Real-Time Data Processing

2 Kafka Architecture - (Brokers, Topics, Partitions, Replicas, Producers, Consumers, and ZooKeeper), How does Kafka achieve fault tolerance?

3 Creating and Managing Topics in Kafka

4 Understanding Partitions and Replicas in Kafka

5 Producers and Consumers

5.1 Writing producers to send data to Kafka

5.2 Writing consumers to read data from Kafka

5.3 (Producer and consumer configurations), (Synchronous vs. asynchronous producer sends), (Consumer groups and message consumption)

6 Kafka Connect

6.1 Introduction to Kafka Connect - Setting up and configuring connectors

6.2 Source and Sink connectors, Custom connectors development

6.3 Common connectors for databases, file systems, and other data sources

7 Kafka Streams

7.1 Introduction to Kafka Streams API

7.2 Stream processing concepts: KStreams, KTables

7.3 Writing and deploying stream processing applications

7.4 Stateful processing and windowing operations

7.5 Interactive queries and materialized views

8 Schema Management with Confluent Schema Registry - Introduction, Registering and managing schemas, (Avro, Protobuf, and JSON Schemas)

9 Kafka Security, Monitoring, Management, Performance Tuning

10 Advanced Concepts in Apache Kafka

10.1 Kafka Transactions and Exactly-Once Semantics, Joins and Aggregation in Kafka Streams, Log Compaction, Handling Rebalances in Kafka Streams

10.2 Designing Pipelines with Kafka, Using Kafka for ETL Processes, Real-Time Data Transformation, Data Integration Patterns

10.3 Kafka Integration with Hadoop and Spark

10.4 Using Kafka with Data Warehouses - Amazon Redshift, Snowflake

10.5 Real-time Data Analytics with Kafka

10.6 Kafka Integration with Cloud (AWS, Google Cloud, Microsoft Azure)
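Two mechanics from the partitions and consumer-group topics above can be modelled in a few lines. Records with the same key are routed to the same partition, so per-key order is preserved, and a consumer group splits a topic's partitions among its members. This is a hypothetical single-process sketch. Kafka's default partitioner hashes keys with murmur2 (`zlib.crc32` stands in here), and Kafka ships several assignment strategies (range, round-robin, sticky); `assign` below is a simplified round-robin.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3


def choose_partition(key, num_partitions=NUM_PARTITIONS):
    # Same key -> same partition (crc32 as a stand-in for Kafka's murmur2).
    return zlib.crc32(key.encode()) % num_partitions


def assign(partitions, consumers):
    # Simplified round-robin assignment of partitions to one consumer group.
    assignment = defaultdict(list)
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return dict(assignment)


log = defaultdict(list)  # partition -> append-only list of (offset, key, value)
events = [("user-1", "login"), ("user-2", "click"), ("user-1", "click"),
          ("user-3", "logout"), ("user-1", "logout")]
for key, value in events:
    p = choose_partition(key)
    log[p].append((len(log[p]), key, value))  # offset = position within the partition

user1 = [v for _, k, v in log[choose_partition("user-1")] if k == "user-1"]
print(user1)                                 # ['login', 'click', 'logout']
print(assign(range(NUM_PARTITIONS), ["c1", "c2"]))  # {'c1': [0, 2], 'c2': [1]}
```

Ordering is guaranteed only within a partition, which is why choosing a good message key matters when per-entity order is important.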

0 Installation and Configuration

1 Introduction to Apache Airflow - Overview, Use Cases, Comparison with other workflow orchestration tools

2 Airflow Architecture - Schedulers, Web Server, Worker, Metadata Database, Directed Acyclic Graphs, Task Instances and their states, Executors

3 Directed Acyclic Graphs (DAGs) - (Writing DAGs using Python), (Understanding Operators, tasks, and dependencies), (Built-in Operators), (Custom Operators and sensors)

4 Scheduling Workflows and Data Pipelines

4.1 Designing Efficient Workflows

4.2 Scheduling Complex Pipelines

4.3 Implementing ETL pipelines

4.4 Orchestrating data pipelines across multiple systems

4.5 Handling dependencies and data consistency

5 Scheduling and Triggering

5.1 Scheduling DAGs with cron expressions and timedelta

5.2 Trigger rules and conditional task execution

5.3 Manual triggering of DAGs

5.4 Handling time zones and daylight saving time

6 Task Management and Execution

6.1 Task retries, SLA, and timeouts

6.2 Task dependencies and triggering rules

6.3 Parallel task execution and task queues

6.4 Handling task failures and retries

7 Airflow Plugins, Airflow UI, Security, Monitoring and Integrations

7.1 Creating and using Airflow Plugins

7.2 Navigating Airflow web interface

7.3 Authentication, Authorization, Role-based access control, Securing Airflow web server

7.4 Monitoring DAGs, Managing DAGs using Airflow CLI, Viewing Logs and Task Details

7.5 Integration of Airflow with Databases and Cloud Services, Using Hooks for External System Interactions

8 Advanced Level Apache Airflow Tutorial

8.1 Dynamic DAG generation, SubDAGs, Branching and conditional workflows

8.2 Optimizing Airflow Performance, Scaling Airflow with Celery and Kubernetes, Resource Management

8.3 Version Controlling DAGs and Configurations, Implementing CI/CD for Airflow DAGs, Testing and Validating before deployment

8.4 Integration with Cloud Services - AWS, Google Cloud, Microsoft Azure
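A DAG is just tasks plus "runs after" edges; in Airflow these are typically declared with bitshift syntax such as `extract >> transform`. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to show how such dependencies resolve into an execution order, grouping tasks that can run in parallel into "waves". The task names are hypothetical, and this models dependency resolution only, not how the Airflow scheduler actually queues and runs task instances.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL DAG: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "quality_check": {"transform"},
    "load_warehouse": {"quality_check"},
    "notify": {"load_warehouse"},
}


def execution_waves(graph):
    """Group tasks into waves; tasks within a wave have no mutual dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose upstreams are done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished so downstream tasks unlock
    return waves


for number, wave in enumerate(execution_waves(dag), start=1):
    print(number, wave)
```

The two extract tasks land in the same wave because neither depends on the other, which is exactly the kind of parallelism an executor such as Celery or Kubernetes can exploit.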

0 Installation and Configuration

1 Introduction to and Overview of Docker, Benefits of using Docker for Data Engineering, (Key Concepts - Containers, Images, Docker Engine)

2 Working with Docker Images - Pulling Images from Docker Hub, Custom Docker Images using Dockerfiles

3 Docker Containers - Creating, Running, Stopping, Starting, Restarting, Inspecting, and Managing Docker Containers

4 Data Persistence in Docker - Managing data within containers, Docker Volumes for Persistent storage, Bind mounts vs volumes, Backup and Restore for Data Volumes

5 Docker Networking - (Bridge, Host, Overlay), Connecting Containers using Networks, Exposing and Publishing Container Ports, Network Security

6 Docker Compose - (Multi-container applications with docker-compose.yml), (Docker Compose for development, testing, and production)

7 Applications of Docker in Data Engineering

7.1 Data Ingestion and ETL Processes

7.2 Data Processing and Analytics - Running Big Data Frameworks in Docker, Containerizing Jupyter Notebooks for Data Analysis

7.3 Database Management - Running Relational and NoSQL Databases like MySQL and MongoDB in Docker, Data Backup and Recovery for Databases

7.4 Machine Learning and Artificial Intelligence - Deploying ML & AI models in Docker, Docker for Reproducible ML Experiments

7.5 Distributed Systems - Distributed Data Pipelines using Docker, Orchestrating Distributed Systems with Kubernetes

0 Installation and Configuration

1 Introduction to Kubernetes - Clusters, Nodes, Pods, Namespaces, Deployments, Services, ConfigMaps and Secrets, Benefits of Kubernetes in Data Engineering

2 Storage Management - Persistent Volumes (PVs) and Persistent Volume Claims (PVCs), StatefulSets and Storage Classes

3 Networking - Networking Fundamentals for Kubernetes, Network Policies, Controllers for Load Balancing and Routing

4 Advanced Kubernetes Techniques

4.1 Integrating Kubernetes with CI/CD Pipelines, Deploying Updates and Managing Rollbacks

4.2 Deploying Data Processing Frameworks on Kubernetes, Managing Databases in Kubernetes, Containerizing ETL Pipelines

4.3 Multicluster Management, Service Mesh for Microservices Management, Kubernetes Operators for Managing Complex Applications

5 Miscellaneous

5.1 Resource Management, Monitoring and Logging, Using Helm for Package Management

5.2 Role-based access control, Secrets Management, Pod Security

0 Installation and Configuration

1 Understanding Jenkins as a continuous integration and continuous delivery (CI/CD) tool.

2 Jobs and Pipelines in Jenkins

3 Version Control using Git and GitHub in Jenkins

4 Pipelines in Jenkins - Managing Dependencies and Sequential Tasks, Stages and Steps for Pipeline Workflows, Handling Errors in Pipelines

5 Building and Testing - Automating processes for Data Engineering Applications, Implementing Automated Testing, Jenkins agents and executors

6 Deployment and Release - Automating Deployment with Jenkins Pipelines, Continuous Deployment, Blue-Green Deployments and Canary Releases

7 Miscellaneous

7.1 Monitoring Jenkins Pipelines executions and build statuses, Configuring Email Notifications for Failures and successes in Pipelines, Pipeline Health Check

7.2 Role based access control, Securing Credentials and Sensitive Information in Jenkins

7.3 Optimizing Performance for Data Engineering Pipelines, Scaling Jenkins Infrastructure with Distributed Builds and Agents

7.4 Jenkins Plugins, Building Custom Jenkins Plugins, Automated Data Pipelines with Jenkins
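For the canary releases mentioned under Deployment and Release: Jenkins itself does not route traffic. A pipeline stage typically adjusts a traffic split in a load balancer or service mesh. One common technique behind such a split is deterministic hashing, so each user consistently sees the same version while the canary percentage is raised step by step. The sketch below is a hypothetical, tool-agnostic illustration of that idea, not a Jenkins feature or plugin API.

```python
import hashlib


def bucket(user_id, buckets=100):
    # Stable hash: the same user always maps to the same bucket in 0..99.
    digest = hashlib.sha256(user_id.encode()).hexdigest()
    return int(digest, 16) % buckets


def route(user_id, canary_percent):
    # Users whose bucket falls below the threshold are served by the canary.
    return "canary" if bucket(user_id) < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u, 10) == "canary" for u in users) / len(users)
print(f"canary share at 10%: {share:.3f}")  # close to 0.10
```

Because the threshold only grows during a rollout, anyone already on the canary at 10% stays on it at 50%, which keeps the user experience consistent. Setting the percentage back to 0 acts as an instant rollback.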

1 Introduction to Amazon Web Services (AWS) - Overview, Key Services for Data Engineering, AWS Global Infrastructure

2 Core AWS Services

2.1 Compute - Amazon EC2, AWS Lambda, AWS Fargate

2.2 Storage - Amazon S3, Amazon EBS, Amazon EFS

2.3 Networking - Amazon VPC, AWS Direct Connect, Amazon Route 53

3 Data Warehousing using Amazon Redshift - Architecture, Redshift Spectrum, Performance Tuning

4 Processing Data Lakes using AWS Lake Formation and Integrating Data Lake with other AWS Services

5 Database Services with Amazon Web Services

5.1 Relational Databases: Amazon RDS, Amazon Aurora

5.2 NoSQL Databases: Amazon DynamoDB, Amazon DocumentDB (with MongoDB compatibility)

5.3 Database Migration Service (DMS) for Data Migration

6 Big Data Processing

6.1 Amazon EMR (Elastic MapReduce) - (Setting up clusters), (Using Apache Hadoop, Spark, Hive, Presto)

6.2 AWS Glue - ETL (Extract, Transform, Load), Data Cataloging

6.3 Amazon Kinesis - Kinesis Data Streams, Kinesis Data Firehose, Kinesis Data Analytics

7 Analytics - Amazon Athena (Querying Data in Amazon S3, Integration with AWS Glue), Amazon QuickSight (Visualizations)

8 Data Integration and Orchestration - AWS Step Functions, AWS Glue Workflows, Amazon Managed Workflows for Apache Airflow

9 Machine Learning and Artificial Intelligence - Amazon SageMaker, AWS ML Services (Comprehend, Rekognition, Polly, etc.), Building Data Pipelines for Machine Learning

10 Miscellaneous

10.1 Security and Compliance - Amazon Virtual Private Cloud, AWS Identity and Access Management (IAM), AWS Key Management Service (KMS), AWS Shield, AWS WAF

10.2 Monitoring and Logging - Amazon CloudWatch, AWS CloudTrail, AWS Config

10.3 AWS Cost Management - AWS Cost Explorer, AWS Budgets, AWS Pricing Calculator

10.4 DevOps and Automation - AWS CodePipeline, AWS CodeBuild, AWS CodeDeploy, Infrastructure as Code (IaC) with AWS CloudFormation and AWS CDK

- Professional Soft Skills Sessions for Interview Preparation and Workplace Behavior

- Data Engineering Final Capstone Project

- Final Exam

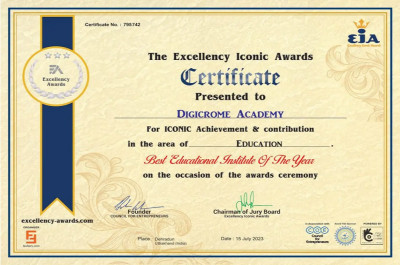

Our Certificates

Certified by